yolov11 安卓部署 2025最新

ncnn

前置:Android Studio 安装配置教程 - Windows(详细版)

2025年最新Android Studio汉化教程

https://github.com/sollyu/AndroidStudioChineseLanguagePack

Android部署自定义YOLOV8模型(2024.10.25)-全流程

基于YOLO模型的安卓手机部署

YOLOv11安卓端部署终极指南:从训练到NCNN移植,30分钟搞定手机实时检测!

ncnn-android-yolo11

如何构建和运行

步骤1

https://github.com/Tencent/ncnn/releases

下载 ncnn-YYYYMMDD-android-vulkan.zip 或自行构建 ncnn for android

将 ncnn-YYYYMMDD-android-vulkan.zip 解压到app/src/main/jni 目录下,并将app/src/main/jni/CMakeLists.txt中的ncnn_DIR路径修改为你自己的路径

步骤2

https://github.com/nihui/opencv-mobile

下载 opencv-mobile-XYZ-android.zip

将 opencv-mobile-XYZ-android.zip 解压到app/src/main/jni中,并将app/src/main/jni/CMakeLists.txt中的OpenCV_DIR路径更改为您的路径

步骤3

https://github.com/nihui/mesa-turnip-android-driver

下载 mesa-turnip-android-XYZ.zip

如果不存在,则创建目录app/src/main/jniLibs/arm64-v8a

libvulkan_freedreno.so将mesa-turnip-android-XYZ.zip解压到app/src/main/jniLibs/arm64-v8a中

步骤4

使用 Android Studio 打开此项目,构建它并享受它!

按照这个项目来就行了,然后的话就是ultralytics=8.3.39重新训练yolov11模型,要不然会导致没有输出框显示。

YOLO11 模型转换指南

这里暂时只针对detect也就是目标检测的模型

1 安装 以及 导出yolo11 torchscript

pip3 install -U ultralytics pnnx ncnn

yolo export model=yolo11n.pt format=torchscript

pnnx yolo11n.torchscript

# 分割模型

yolo export model=yolo11n-seg.pt format=torchscript

pnnx yolo11n-seg.torchscript

# obb

yolo export model=yolo11n-obb.pt format=torchscript

pnnx yolo11n-obb.torchscript

2 手动修改 pnnx 模型脚本以进行动态形状推断

编辑yolo11n_pnnx.py/ yolo11n_seg_pnnx.py/ yolo11n_pose_pnnx.py/yolo11n_obb_pnnx.py

- 修改重塑以支持动态图像大小

- 在连接之前排列张量并调整连接轴(较小张量上的排列速度更快)

- 放弃后处理部分(我们在外部实现后处理,以避免在阈值以下进行无效的边界框坐标计算,这样速度更快)

修改前

v_235 = v_204.view(1, 144, 6400)

v_236 = v_219.view(1, 144, 1600)

v_237 = v_234.view(1, 144, 400)

v_238 = torch.cat((v_235, v_236, v_237), dim=2)

# ...

修改后

v_235 = v_204.view(1, 144, -1).transpose(1, 2)

v_236 = v_219.view(1, 144, -1).transpose(1, 2)

v_237 = v_234.view(1, 144, -1).transpose(1, 2)

v_238 = torch.cat((v_235, v_236, v_237), dim=1)

return v_238

修改区域注意力以进行动态形状推理

修改前

# ...

v_95 = self.model_10_m_0_attn_qkv_conv(v_94)

v_96 = v_95.view(1, 2, 128, 1024)

v_97, v_98, v_99 = torch.split(tensor=v_96, dim=2, split_size_or_sections=(32,32,64))

v_100 = torch.transpose(input=v_97, dim0=-2, dim1=-1)

v_101 = torch.matmul(input=v_100, other=v_98)

v_102 = (v_101 * 0.176777)

v_103 = F.softmax(input=v_102, dim=-1)

v_104 = torch.transpose(input=v_103, dim0=-2, dim1=-1)

v_105 = torch.matmul(input=v_99, other=v_104)

v_106 = v_105.view(1, 128, 32, 32)

v_107 = v_99.reshape(1, 128, 32, 32)

v_108 = self.model_10_m_0_attn_pe_conv(v_107)

v_109 = (v_106 + v_108)

v_110 = self.model_10_m_0_attn_proj_conv(v_109)

# ...

修改后

# ...

v_95 = self.model_10_m_0_attn_qkv_conv(v_94)

v_96 = v_95.view(1, 2, 128, -1) # <--- This line, note this v_95

v_97, v_98, v_99 = torch.split(tensor=v_96, dim=2, split_size_or_sections=(32,32,64))

v_100 = torch.transpose(input=v_97, dim0=-2, dim1=-1)

v_101 = torch.matmul(input=v_100, other=v_98)

v_102 = (v_101 * 0.176777)

v_103 = F.softmax(input=v_102, dim=-1)

v_104 = torch.transpose(input=v_103, dim0=-2, dim1=-1)

v_105 = torch.matmul(input=v_99, other=v_104)

v_106 = v_105.view(1, 128, v_95.size(2), v_95.size(3)) # <--- This line

v_107 = v_99.reshape(1, 128, v_95.size(2), v_95.size(3)) # <--- This line

v_108 = self.model_10_m_0_attn_pe_conv(v_107)

v_109 = (v_106 + v_108)

v_110 = self.model_10_m_0_attn_proj_conv(v_109)

# ...

seg模型

修改前

v_202 = v_201.view(1, 32, 6400)

v_208 = v_207.view(1, 32, 1600)

v_214 = v_213.view(1, 32, 400)

v_215 = torch.cat((v_202, v_208, v_214), dim=2)

# ...

v_261 = v_230.view(1, 144, 6400)

v_262 = v_245.view(1, 144, 1600)

v_263 = v_260.view(1, 144, 400)

v_264 = torch.cat((v_261, v_262, v_263), dim=2)

# ...

v_285 = (v_284, v_196, )

return v_285

修改后

v_202 = v_201.view(1, 32, -1).transpose(1, 2)

v_208 = v_207.view(1, 32, -1).transpose(1, 2)

v_214 = v_213.view(1, 32, -1).transpose(1, 2)

v_215 = torch.cat((v_202, v_208, v_214), dim=1)

# ...

v_261 = v_230.view(1, 144, -1).transpose(1, 2)

v_262 = v_245.view(1, 144, -1).transpose(1, 2)

v_263 = v_260.view(1, 144, -1).transpose(1, 2)

v_264 = torch.cat((v_261, v_262, v_263), dim=1)

return v_264, v_215, v_196

修改区域注意力以进行动态形状推理

做跟检测一样的修改

修改前

# ...

v_95 = self.model_10_m_0_attn_qkv_conv(v_94)

v_96 = v_95.view(1, 2, 128, 1024)

v_97, v_98, v_99 = torch.split(tensor=v_96, dim=2, split_size_or_sections=(32,32,64))

v_100 = torch.transpose(input=v_97, dim0=-2, dim1=-1)

v_101 = torch.matmul(input=v_100, other=v_98)

v_102 = (v_101 * 0.176777)

v_103 = F.softmax(input=v_102, dim=-1)

v_104 = torch.transpose(input=v_103, dim0=-2, dim1=-1)

v_105 = torch.matmul(input=v_99, other=v_104)

v_106 = v_105.view(1, 128, 32, 32)

v_107 = v_99.reshape(1, 128, 32, 32)

v_108 = self.model_10_m_0_attn_pe_conv(v_107)

v_109 = (v_106 + v_108)

v_110 = self.model_10_m_0_attn_proj_conv(v_109)

# ...

修改后

# ...

v_95 = self.model_10_m_0_attn_qkv_conv(v_94)

v_96 = v_95.view(1, 2, 128, -1) # <--- This line, note this v_95

v_97, v_98, v_99 = torch.split(tensor=v_96, dim=2, split_size_or_sections=(32,32,64))

v_100 = torch.transpose(input=v_97, dim0=-2, dim1=-1)

v_101 = torch.matmul(input=v_100, other=v_98)

v_102 = (v_101 * 0.176777)

v_103 = F.softmax(input=v_102, dim=-1)

v_104 = torch.transpose(input=v_103, dim0=-2, dim1=-1)

v_105 = torch.matmul(input=v_99, other=v_104)

v_106 = v_105.view(1, 128, v_95.size(2), v_95.size(3)) # <--- This line

v_107 = v_99.reshape(1, 128, v_95.size(2), v_95.size(3)) # <--- This line

v_108 = self.model_10_m_0_attn_pe_conv(v_107)

v_109 = (v_106 + v_108)

v_110 = self.model_10_m_0_attn_proj_conv(v_109)

# ...

obb

修改前

v_195 = v_194.view(1, 1, 16384)

v_201 = v_200.view(1, 1, 4096)

v_207 = v_206.view(1, 1, 1024)

v_208 = torch.cat((v_195, v_201, v_207), dim=2)

# ...

v_256 = v_225.view(1, 79, 16384)

v_257 = v_240.view(1, 79, 4096)

v_258 = v_255.view(1, 79, 1024)

v_259 = torch.cat((v_256, v_257, v_258), dim=2)

# ...

修改后

v_195 = v_194.view(1, 1, -1).transpose(1, 2)

v_201 = v_200.view(1, 1, -1).transpose(1, 2)

v_207 = v_206.view(1, 1, -1).transpose(1, 2)

v_208 = torch.cat((v_195, v_201, v_207), dim=1)

# ...

v_256 = v_225.view(1, 79, -1).transpose(1, 2)

v_257 = v_240.view(1, 79, -1).transpose(1, 2)

v_258 = v_255.view(1, 79, -1).transpose(1, 2)

v_259 = torch.cat((v_256, v_257, v_258), dim=1)

return v_259, v_208

3 重新导出yolo11 torchscript

python

import yolo11n_pnnx

yolo11n_pnnx.export_torchscript()

python3 -c 'import yolo11n_pnnx; yolo11n_pnnx.export_torchscript()'

python3 -c 'import yolo11n_seg_pnnx; yolo11n_seg_pnnx.export_torchscript()'

python3 -c 'import yolo11n_pose_pnnx; yolo11n_pose_pnnx.export_torchscript()'

python3 -c 'import yolo11n_obb_pnnx; yolo11n_obb_pnnx.export_torchscript()'

4 转换具有动态形状的新 torchscript

pnnx yolo11n_pnnx.py.pt inputshape=[1,3,640,640] inputshape2=[1,3,320,320]

pnnx yolo11n_seg_pnnx.py.pt inputshape=[1,3,640,640] inputshape2=[1,3,320,320]

pnnx yolo11n_pose_pnnx.py.pt inputshape=[1,3,640,640] inputshape2=[1,3,320,320]

pnnx yolo11n_obb_pnnx.py.pt inputshape=[1,3,1024,1024] inputshape2=[1,3,512,512]

# 现在你得到了ncnn模型文件

mv yolo11n_pnnx.py.ncnn.param yolo11n.ncnn.param

mv yolo11n_pnnx.py.ncnn.bin yolo11n.ncnn.bin

# seg

mv yolo11n_seg_pnnx.py.ncnn.param yolo11n_seg.ncnn.param

mv yolo11n_seg_pnnx.py.ncnn.bin yolo11n_seg.ncnn.bin

# pose

mv yolo11n_pose_pnnx.py.ncnn.param yolo11n_pose.ncnn.param

mv yolo11n_pose_pnnx.py.ncnn.bin yolo11n_pose.ncnn.bin

# obb

mv yolo11n_obb_pnnx.py.ncnn.param yolo11n_obb.ncnn.param

mv yolo11n_obb_pnnx.py.ncnn.bin yolo11n_obb.ncnn.bin

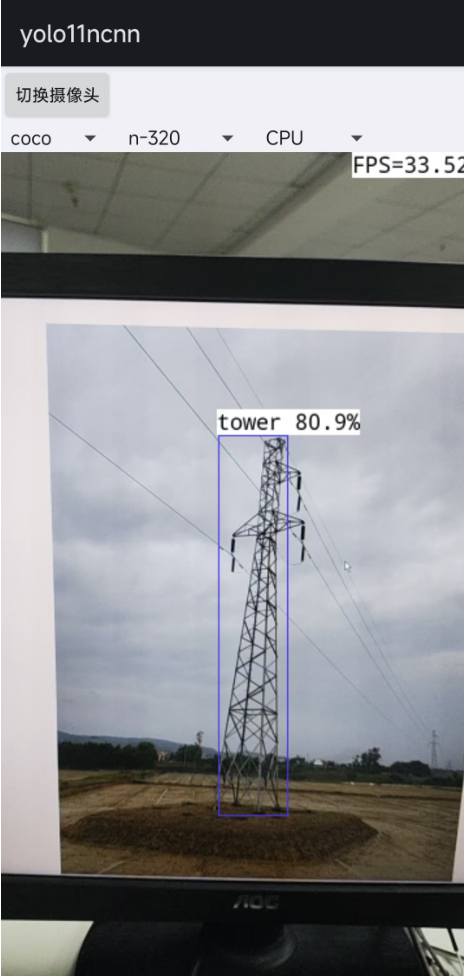

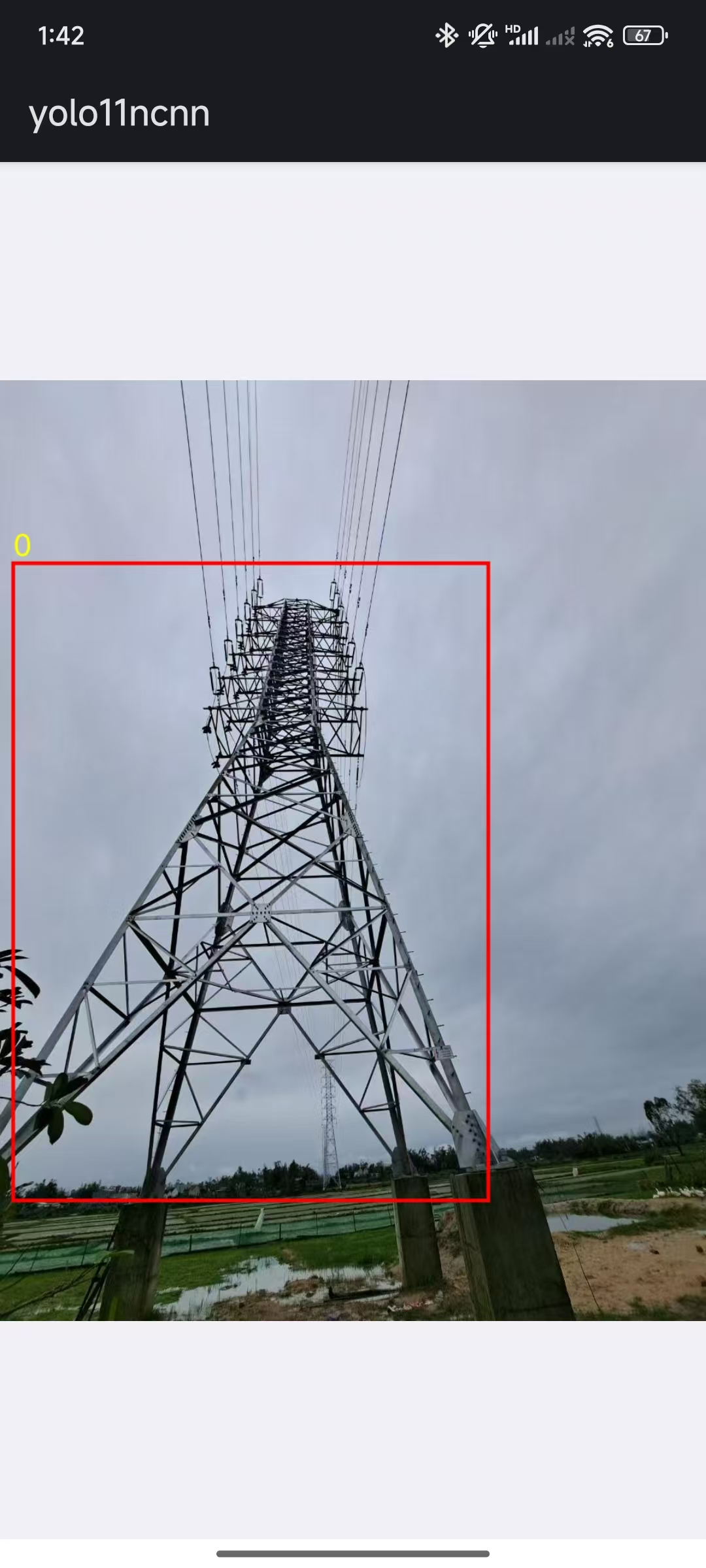

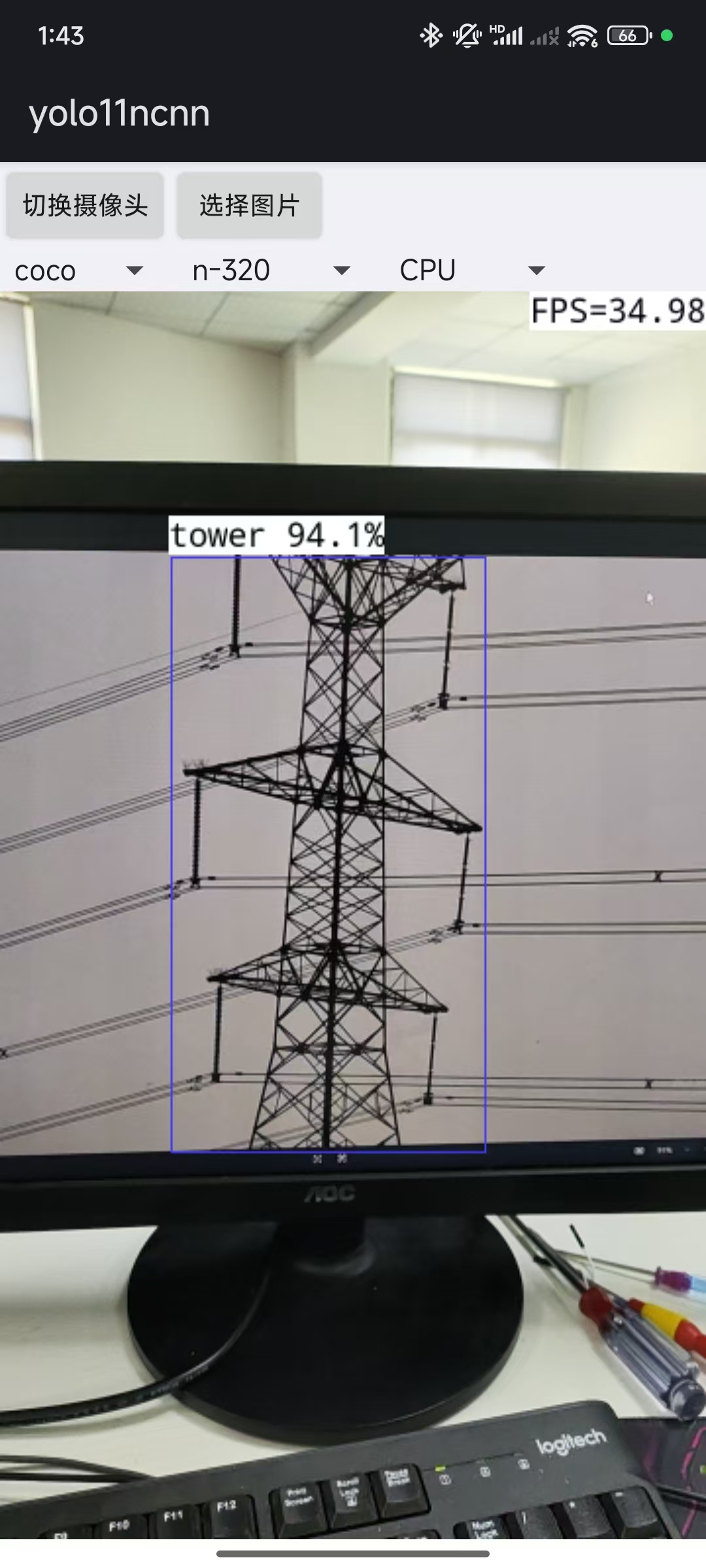

成功在我的小米手机和华为pad上实现杆塔的识别,华为pad的调用摄像头有点瑕疵。

pad调整显示的方向就ok了

增加选择相册图像进行识别

你的ultralytics训练版本是多少,我按照你的方法模型没有输出,我怀疑可能是ultralytics版本不一致

解决了,ultralytics=8.3.39重新训练,然后按照你的方法就能有输出的框了

转载自CSDN-专业IT技术社区

原文链接:https://blog.csdn.net/qq_40725313/article/details/149437391